Linear subspace

One-dimensional subspaces in the two-dimensional vector space over the finite field F_5. The origin (0, 0), marked with green circles, belongs to each of the six 1-subspaces, while each of the 24 remaining points belongs to exactly one; this property holds for 1-subspaces over any field and in all dimensions. The whole of F_5^2 (i.e. a 5 × 5 square) is pictured four times for better visualization.

In linear algebra and related fields of mathematics, a linear subspace, also known as a vector subspace, or, in the older literature, a linear manifold,[1][2] is a vector space that is a subset of some other (possibly higher-dimensional) vector space. A linear subspace is usually called simply a subspace when the context serves to distinguish it from other kinds of subspaces.

Definition and useful characterization of subspace

Let K be a field (such as the real numbers), and let V be a vector space over K. As usual, we call elements of V vectors and call elements of K scalars. Ignoring the full extent of mathematical generalization, scalars can be understood simply as numbers. Suppose that W is a subset of V. If W is a vector space itself (which means that it is closed under operations of addition and scalar multiplication), with the same vector space operations as V has, then W is a subspace of V.

To use this definition, we don't have to prove that all the properties of a vector space hold for W. Instead, we can prove a theorem that gives us an easier way to show that a subset of a vector space is a subspace.

Theorem: Let V be a vector space over the field K, and let W be a subset of V. Then W is a subspace if and only if W satisfies the following three conditions:

1. The zero vector, 0, is in W.

2. If u and v are elements of W, then the sum u + v is an element of W.

3. If u is an element of W and c is a scalar from K, then the product cu is an element of W.

Proof: Firstly, property 1 ensures W is nonempty. Looking at the definition of a vector space, we see that properties 2 and 3 above assure closure of W under addition and scalar multiplication, so the vector space operations are well defined. Since elements of W are necessarily elements of V, axioms 1, 2 and 5–8 of a vector space are satisfied. By the closure of W under scalar multiplication (specifically by 0 and −1), the two remaining axioms, which require an additive identity and additive inverses, are satisfied as well.

Conversely, if W is a subspace of V, then W is itself a vector space under the operations induced by V, so properties 2 and 3 are satisfied. By property 3, −w is in W whenever w is, and it follows that W is closed under subtraction as well. Since W is nonempty, there is an element x in W, and x − x = 0 is in W, so property 1 is satisfied. One can also argue that since W is nonempty, there is an element x in W, and 0 is in the field K, so 0x = 0 is in W, and therefore property 1 is satisfied.

Examples

Example I: Let the field K be the set R of real numbers, and let the vector space V be the real coordinate space R3. Take W to be the set of all vectors in V whose last component is 0. Then W is a subspace of V.

Proof:

- Given u and v in W, then they can be expressed as u = (u1, u2, 0) and v = (v1, v2, 0). Then u + v = (u1+v1, u2+v2, 0+0) = (u1+v1, u2+v2, 0). Thus, u + v is an element of W, too.

- Given u in W and a scalar c in R, if u = (u1, u2, 0) again, then cu = (cu1, cu2, c·0) = (cu1, cu2, 0). Thus, cu is an element of W too.

- The zero vector (0, 0, 0) has last component 0, so it is an element of W.

Example II: Let the field be R again, but now let the vector space be the Cartesian plane R2. Take W to be the set of points (x, y) of R2 such that x = y. Then W is a subspace of R2.

Proof:

- Let p = (p1, p2) and q = (q1, q2) be elements of W, that is, points in the plane such that p1 = p2 and q1 = q2. Then p + q = (p1+q1, p2+q2); since p1 = p2 and q1 = q2, then p1 + q1 = p2 + q2, so p + q is an element of W.

- Let p = (p1, p2) be an element of W, that is, a point in the plane such that p1 = p2, and let c be a scalar in R. Then cp = (cp1, cp2); since p1 = p2, then cp1 = cp2, so cp is an element of W.

- The zero vector (0, 0) satisfies x = y, so it is an element of W.

In general, any subset of the real coordinate space Rn that is defined by a system of homogeneous linear equations will yield a subspace. (The equation in example I was z = 0, and the equation in example II was x = y.) Geometrically, these subspaces are points, lines, planes, and so on, that pass through the point 0.
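The three conditions of the theorem can be spot-checked numerically for Examples I and II. The following is a minimal Python sketch, not a proof: the function name `spot_check_subspace`, the small integer grid, and the non-homogeneous contrast case `shifted_line` are assumptions introduced only for illustration.

```python
from itertools import product

def spot_check_subspace(in_w, dim, lo=-2, hi=2):
    """Spot-check the three subspace conditions on a small integer grid.

    Passing is only evidence (finitely many samples); failing yields a
    genuine counterexample to one of the conditions.
    """
    points = [p for p in product(range(lo, hi + 1), repeat=dim) if in_w(p)]
    scalars = range(lo, hi + 1)
    if not in_w((0,) * dim):                    # condition 1: zero vector
        return False
    for u, v in product(points, repeat=2):      # condition 2: closed under +
        if not in_w(tuple(a + b for a, b in zip(u, v))):
            return False
    for c, u in product(scalars, points):       # condition 3: closed under scaling
        if not in_w(tuple(c * a for a in u)):
            return False
    return True

def example_1(p):        # Example I: last component is 0, in R^3
    return p[2] == 0

def example_2(p):        # Example II: x = y, in R^2
    return p[0] == p[1]

def shifted_line(p):     # hypothetical non-homogeneous equation x = y + 1
    return p[0] == p[1] + 1

print(spot_check_subspace(example_1, 3))
print(spot_check_subspace(example_2, 2))
print(spot_check_subspace(shifted_line, 2))
```

The contrast case fails already at the first condition, since (0, 0) does not satisfy x = y + 1; this is why the equations must be homogeneous.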

Examples related to calculus

Example III: Again take the field to be R, but now let the vector space V be the set R^R of all functions from R to R. Let C(R) be the subset consisting of continuous functions. Then C(R) is a subspace of R^R.

Proof:

- We know from calculus that 0 ∈ C(R) ⊂ R^R.

- We know from calculus that the sum of continuous functions is continuous.

- Again, we know from calculus that the product of a continuous function and a number is continuous.

Example IV: Keep the same field and vector space as before, but now consider the set Diff(R) of all differentiable functions. The same sort of argument as before shows that this is a subspace too.

Examples that extend these themes are common in functional analysis.

Properties of subspaces

A way to characterize subspaces is that they are closed under linear combinations. That is, a nonempty set W is a subspace if and only if every linear combination of (finitely many) elements of W also belongs to W. Conditions 2 and 3 for a subspace are simply the most basic kinds of linear combinations.

In a topological vector space X, a subspace W need not be closed in general, but a finite-dimensional subspace is always closed.[3] The same is true for subspaces of finite codimension, i.e. determined by a finite number of continuous linear functionals.

Descriptions

Descriptions of subspaces include the solution set to a homogeneous system of linear equations, the subset of Euclidean space described by a system of homogeneous linear parametric equations, the span of a collection of vectors, and the null space, column space, and row space of a matrix. Geometrically (especially, over the field of real numbers and its subfields), a subspace is a flat in an n-space that passes through the origin.

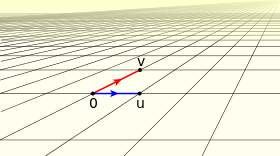

A natural description of a 1-subspace is the set of all scalar multiples of one nonzero vector v. Two 1-subspaces specified by vectors v and v′ are equal if and only if one vector can be obtained from the other by scalar multiplication:

$$\exists c \in K : \mathbf{v}' = c\mathbf{v} \quad \left(\text{or } \mathbf{v} = \tfrac{1}{c}\mathbf{v}'\right)$$

This idea is generalized for higher dimensions with linear span, but criteria for equality of k-spaces specified by sets of k vectors are not so simple.

A dual description is provided by linear functionals (usually implemented as linear equations). One nonzero linear functional F specifies its kernel subspace F = 0 of codimension 1. Two subspaces of codimension 1 specified by linear functionals F and F′ are equal if and only if one functional can be obtained from the other by scalar multiplication (in the dual space):

$$\exists c \in K : \mathbf{F}' = c\mathbf{F} \quad \left(\text{or } \mathbf{F} = \tfrac{1}{c}\mathbf{F}'\right)$$

This generalizes to higher codimensions with a system of equations. The following two subsections present this latter description in detail, and the remaining four subsections further describe the idea of linear span.

Systems of linear equations

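The solution set to any homogeneous system of linear equations with n variables is a subspace in the coordinate space K^n:

$$\left\{ (x_1, \dots, x_n) \in K^n : \begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= 0 \\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= 0 \\ &\;\;\vdots \\ a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= 0 \end{aligned} \right\}$$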

For example (over real or rational numbers), the set of all vectors (x, y, z) satisfying the equations

is a one-dimensional subspace. More generally, given n independent linear functionals on K^k, the subspace they define is the null space of A, the matrix whose rows are the n functionals, and its dimension is the dimension of that null space.

Null space of a matrix

In a finite-dimensional space, a homogeneous system of linear equations can be written as a single matrix equation:

$$A\mathbf{x} = \mathbf{0}.$$

The set of solutions to this equation is known as the null space of the matrix. For example, the subspace described above is the null space of the matrix

Every subspace of Kn can be described as the null space of some matrix (see algorithms, below).

Linear parametric equations

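The subset of K^n described by a system of homogeneous linear parametric equations is a subspace:

$$\left\{ (x_1, \dots, x_n) \in K^n : \begin{aligned} x_1 &= a_{11}t_1 + a_{12}t_2 + \cdots + a_{1m}t_m \\ x_2 &= a_{21}t_1 + a_{22}t_2 + \cdots + a_{2m}t_m \\ &\;\;\vdots \\ x_n &= a_{n1}t_1 + a_{n2}t_2 + \cdots + a_{nm}t_m \end{aligned} \ \text{ for some } t_1, \dots, t_m \in K \right\}$$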

For example, the set of all vectors (x, y, z) parameterized by the equations

$$x = 2t_1 + 3t_2, \qquad y = 5t_1 - 4t_2, \qquad z = -t_1 + 2t_2$$

is a two-dimensional subspace of K3, if K is a number field (such as real or rational numbers).[4]

Span of vectors

In linear algebra, the system of parametric equations can be written as a single vector equation:

$$(x, y, z) = t_1(2, 5, -1) + t_2(3, -4, 2).$$

The expression on the right is called a linear combination of the vectors (2, 5, −1) and (3, −4, 2). These two vectors are said to span the resulting subspace.

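In general, a linear combination of vectors v1, v2, ... , vk is any vector of the form

$$t_1\mathbf{v}_1 + \cdots + t_k\mathbf{v}_k.$$

The set of all possible linear combinations is called the span:

$$\operatorname{Span}\{\mathbf{v}_1, \dots, \mathbf{v}_k\} = \{ t_1\mathbf{v}_1 + \cdots + t_k\mathbf{v}_k : t_1, \dots, t_k \in K \}.$$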

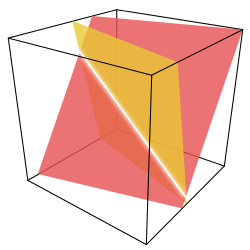

If the vectors v1, ... , vk have n components, then their span is a subspace of Kn. Geometrically, the span is the flat through the origin in n-dimensional space determined by the points v1, ... , vk.

- Example

- The xz-plane in R^3 can be parameterized by the equations

$$x = t_1, \qquad y = 0, \qquad z = t_2.$$

- As a subspace, the xz-plane is spanned by the vectors (1, 0, 0) and (0, 0, 1). Every vector in the xz-plane can be written as a linear combination of these two:

$$(t_1, 0, t_2) = t_1(1, 0, 0) + t_2(0, 0, 1).$$

- Geometrically, this corresponds to the fact that every point on the xz-plane can be reached from the origin by first moving some distance in the direction of (1, 0, 0) and then moving some distance in the direction of (0, 0, 1).

Column space and row space

A system of linear parametric equations in a finite-dimensional space can also be written as a single matrix equation, where t is the vector of parameters:

$$\mathbf{x} = A\mathbf{t}.$$

In this case, the subspace consists of all possible values of the vector x. In linear algebra, this subspace is known as the column space (or image) of the matrix A. It is precisely the subspace of Kn spanned by the column vectors of A.

The row space of a matrix is the subspace spanned by its row vectors. The row space is interesting because it is the orthogonal complement of the null space (see below).

Independence, basis, and dimension

In general, a subspace of Kn determined by k parameters (or spanned by k vectors) has dimension k. However, there are exceptions to this rule. For example, the subspace of K3 spanned by the three vectors (1, 0, 0), (0, 0, 1), and (2, 0, 3) is just the xz-plane, with each point on the plane described by infinitely many different values of t1, t2, t3.

In general, vectors v1, ... , vk are called linearly independent if

$$t_1\mathbf{v}_1 + \cdots + t_k\mathbf{v}_k \neq u_1\mathbf{v}_1 + \cdots + u_k\mathbf{v}_k$$

for (t1, t2, ... , tk) ≠ (u1, u2, ... , uk).[5] If v1, ..., vk are linearly independent, then the coordinates t1, ..., tk for a vector in the span are uniquely determined.
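Linear independence can be tested by comparing the rank of the vectors with their number. The following Python sketch uses exact rational arithmetic over the rationals; the helper names `rank` and `is_linearly_independent` are assumptions made for this illustration, and the test vectors are the ones from the xz-plane example above.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of equal-length vectors, via Gaussian elimination."""
    m = [[Fraction(x) for x in v] for v in vectors]
    r = 0                                   # index of the next pivot row
    for c in range(len(m[0]) if m else 0):
        # Look for a nonzero entry in column c at or below row r.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        for i in range(r + 1, len(m)):      # clear the entries below the pivot
            f = m[i][c] / m[r][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break
    return r

def is_linearly_independent(vectors):
    """k vectors are independent exactly when their rank is k."""
    return rank(vectors) == len(vectors)

print(is_linearly_independent([(1, 0, 0), (0, 0, 1)]))
print(is_linearly_independent([(1, 0, 0), (0, 0, 1), (2, 0, 3)]))
```

The second call reports dependence because (2, 0, 3) = 2(1, 0, 0) + 3(0, 0, 1), so the three vectors span only the two-dimensional xz-plane.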

A basis for a subspace S is a set of linearly independent vectors whose span is S. The number of elements in a basis is always equal to the geometric dimension of the subspace. Any spanning set for a subspace can be changed into a basis by removing redundant vectors (see algorithms, below).

- Example

- Let S be the subspace of R^4 defined by the equations

$$x_1 = 2x_2 \quad \text{and} \quad x_3 = 5x_4.$$

- Then the vectors (2, 1, 0, 0) and (0, 0, 5, 1) are a basis for S. In particular, every vector that satisfies the above equations can be written uniquely as a linear combination of the two basis vectors:

$$(2t_1, t_1, 5t_2, t_2) = t_1(2, 1, 0, 0) + t_2(0, 0, 5, 1).$$

- The subspace S is two-dimensional. Geometrically, it is the plane in R4 passing through the points (0, 0, 0, 0), (2, 1, 0, 0), and (0, 0, 5, 1).

Operations and relations on subspaces

Inclusion

The set-theoretical inclusion binary relation specifies a partial order on the set of all subspaces (of any dimension).

A subspace cannot lie in any subspace of lesser dimension. If dim U = k, a finite number, and U ⊂ W, then dim W = k if and only if U = W.

Intersection

Given subspaces U and W of a vector space V, then their intersection U ∩ W := {v ∈ V : v is an element of both U and W} is also a subspace of V.[6]

Proof:

- Let v and w be elements of U ∩ W. Then v and w belong to both U and W. Because U is a subspace, then v + w belongs to U. Similarly, since W is a subspace, then v + w belongs to W. Thus, v + w belongs to U ∩ W.

- Let v belong to U ∩ W, and let c be a scalar. Then v belongs to both U and W. Since U and W are subspaces, cv belongs to both U and W.

- Since U and W are vector spaces, then 0 belongs to both sets. Thus, 0 belongs to U ∩ W.

For every vector space V, the set {0} and V itself are subspaces of V.[7]

Sum

If U and W are subspaces, their sum is the subspace

$$U + W = \{ \mathbf{u} + \mathbf{w} : \mathbf{u} \in U, \mathbf{w} \in W \}.$$

For example, the sum of two lines is the plane that contains them both. The dimension of the sum satisfies the inequality

$$\max(\dim U, \dim W) \leq \dim(U + W) \leq \dim(U) + \dim(W).$$

Here the minimum only occurs if one subspace is contained in the other, while the maximum is the most general case. The dimension of the intersection and the sum are related:

$$\dim(U + W) = \dim(U) + \dim(W) - \dim(U \cap W).$$
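As a worked check of the relation dim(U + W) = dim(U) + dim(W) − dim(U ∩ W), the sketch below computes the dimensions for two coordinate planes in R^3. It assumes each subspace is given by a spanning list of vectors, so that U + W is spanned by the concatenated lists; the helper `rank` and the variable names are illustrative choices, not part of the article.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of equal-length vectors, via Gaussian elimination."""
    m = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    for c in range(len(m[0]) if m else 0):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        for i in range(r + 1, len(m)):
            f = m[i][c] / m[r][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break
    return r

U = [(1, 0, 0), (0, 1, 0)]     # spanning set of the xy-plane
W = [(0, 1, 0), (0, 0, 1)]     # spanning set of the yz-plane

dim_u, dim_w = rank(U), rank(W)
dim_sum = rank(U + W)          # list concatenation spans the sum U + W
dim_intersection = dim_u + dim_w - dim_sum

print(dim_u, dim_w, dim_sum, dim_intersection)
```

Here the two planes together span all of R^3, and the formula gives a one-dimensional intersection, which is the y-axis.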

Lattice of subspaces

The operations intersection and sum make the set of all subspaces a bounded modular lattice, where the {0} subspace, the least element, is an identity element of the sum operation, and the whole space V, the greatest element, is an identity element of the intersection operation.

Other

If V is an inner product space, then the orthogonal complement ⊥ of any subspace of V is again a subspace. This operation, understood as negation (¬), makes the lattice of subspaces a (possibly infinite) orthocomplemented lattice (it is not a distributive lattice).

In a pseudo-Euclidean space there are orthogonal complements too, but this operation does not form a Boolean algebra (nor a Heyting algebra) because of null subspaces, for which N ∩ N⊥ = N ≠ {0}. The same holds for the ⊥ operation in symplectic vector spaces.

Algorithms

Most algorithms for dealing with subspaces involve row reduction. This is the process of applying elementary row operations to a matrix until it reaches either row echelon form or reduced row echelon form. Row reduction has the following important properties:

- The reduced matrix has the same null space as the original.

- Row reduction does not change the span of the row vectors, i.e. the reduced matrix has the same row space as the original.

- Row reduction does not affect the linear dependence of the column vectors.

Basis for a row space

- Input: An m × n matrix A.

- Output: A basis for the row space of A.

- Use elementary row operations to put A into row echelon form.

- The nonzero rows of the echelon form are a basis for the row space of A.

See the article on row space for an example.

If we instead put the matrix A into reduced row echelon form, then the resulting basis for the row space is uniquely determined. This provides an algorithm for checking whether two row spaces are equal and, by extension, whether two subspaces of Kn are equal.
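The row-space procedure and the equality test it enables can be sketched in Python with exact rational arithmetic. The function names `rref`, `row_space_basis`, and `same_row_space` are hypothetical helpers written for this illustration, and the matrices are arbitrary sample inputs.

```python
from fractions import Fraction

def rref(rows):
    """Return (reduced row echelon form, list of pivot column indices)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0]) if m else 0):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        pivot = m[r][c]
        m[r] = [x / pivot for x in m[r]]            # scale pivot to 1
        for i in range(len(m)):                     # clear the rest of column c
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots

def row_space_basis(rows):
    """Nonzero rows of the reduced row echelon form."""
    reduced, pivots = rref(rows)
    return reduced[:len(pivots)]

def same_row_space(a, b):
    """Two matrices with equally many columns have equal row spaces
    exactly when their reduced bases coincide."""
    return row_space_basis(a) == row_space_basis(b)

A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]
B = [[1, 0, 1], [0, 1, 1]]
print(row_space_basis(A))
print(same_row_space(A, B))
```

Using the reduced form rather than a plain echelon form is what makes the comparison in `same_row_space` meaningful, since only the reduced basis is uniquely determined.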

Subspace membership

- Input: A basis {b1, b2, ..., bk} for a subspace S of K^n, and a vector v with n components.

- Output: Determines whether v is an element of S.

- Create a (k + 1) × n matrix A whose rows are the vectors b1, ... , bk and v.

- Use elementary row operations to put A into row echelon form.

- If the echelon form has a row of zeroes, then the vectors {b1, ..., bk, v} are linearly dependent, and therefore v ∈ S. Otherwise, v is not an element of S.
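The membership test can be sketched as follows, assuming the input really is a basis (linearly independent). The helper `rref` and the function `is_member` are illustrative names; the sketch reduces all the way to reduced row echelon form, which has a zero row exactly when any echelon form does.

```python
from fractions import Fraction

def rref(rows):
    """Return (reduced row echelon form, list of pivot column indices)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0]) if m else 0):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        pivot = m[r][c]
        m[r] = [x / pivot for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots

def is_member(basis, v):
    """True if v lies in the span of the (assumed independent) basis."""
    stacked = [list(b) for b in basis] + [list(v)]   # (k + 1) x n matrix
    _, pivots = rref(stacked)
    # Fewer pivots than rows means the echelon form has a zero row.
    return len(pivots) < len(stacked)

basis = [(1, 0, 1), (0, 1, 1)]
print(is_member(basis, (2, 3, 5)))   # (2, 3, 5) = 2*b1 + 3*b2
print(is_member(basis, (1, 1, 1)))
```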

Basis for a column space

- Input: An m × n matrix A.

- Output: A basis for the column space of A.

- Use elementary row operations to put A into row echelon form.

- Determine which columns of the echelon form have pivots. The corresponding columns of the original matrix are a basis for the column space.

See the article on column space for an example.

This produces a basis for the column space that is a subset of the original column vectors. It works because the columns with pivots are a basis for the column space of the echelon form, and row reduction does not change the linear dependence relationships between the columns.
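A short Python sketch of the column-space procedure follows. It locates the pivot columns by row reduction and then returns the corresponding columns of the original matrix. The names `pivot_columns` and `column_space_basis` and the sample matrix are assumptions for illustration.

```python
from fractions import Fraction

def pivot_columns(rows):
    """Indices of the pivot columns found by row reduction."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0]) if m else 0):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        for i in range(r + 1, len(m)):      # clear entries below the pivot
            f = m[i][c] / m[r][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return pivots

def column_space_basis(rows):
    """Columns of the ORIGINAL matrix at the pivot positions."""
    return [tuple(row[c] for row in rows) for c in pivot_columns(rows)]

A = [[1, 2, 3],
     [2, 4, 6],
     [1, 0, 1]]
print(pivot_columns(A))
print(column_space_basis(A))
```

Note that the basis is read from the original matrix, not from the echelon form: row operations preserve the dependence relations among columns but generally change the column space itself.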

Coordinates for a vector

- Input: A basis {b1, b2, ..., bk} for a subspace S of K^n, and a vector v ∈ S.

- Output: Numbers t1, t2, ..., tk such that v = t1b1 + ··· + tkbk.

- Create an augmented matrix A whose columns are b1, ..., bk, with the last column being v.

- Use elementary row operations to put A into reduced row echelon form.

- Express the final column of the reduced echelon form as a linear combination of the first k columns. The coefficients used are the desired numbers t1, t2, ..., tk. (These should be precisely the first k entries in the final column of the reduced echelon form.)

If the final column of the reduced row echelon form contains a pivot, then the input vector v does not lie in S.
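The coordinate computation can be sketched as below, assuming the basis vectors are linearly independent. The function `coordinates` is a hypothetical helper that returns `None` when the final column contains a pivot, i.e. when v does not lie in S.

```python
from fractions import Fraction

def rref(rows):
    """Return (reduced row echelon form, list of pivot column indices)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0]) if m else 0):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        pivot = m[r][c]
        m[r] = [x / pivot for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots

def coordinates(basis, v):
    """Coordinates of v in the given basis, or None if v is not in the span."""
    k = len(basis)
    # Augmented matrix: columns b1, ..., bk, then v as the last column.
    augmented = [[b[i] for b in basis] + [v[i]] for i in range(len(v))]
    reduced, pivots = rref(augmented)
    if k in pivots:                 # pivot in the final column: v not in S
        return None
    # With an independent basis the pivots sit in columns 0..k-1, so the
    # first k entries of the final column are the coordinates.
    return [reduced[i][k] for i in range(k)]

basis = [(1, 0, 1), (0, 1, 1)]
print(coordinates(basis, (2, 3, 5)))
print(coordinates(basis, (1, 1, 1)))
```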

Basis for a null space

- Input: An m × n matrix A.

- Output: A basis for the null space of A.

- Use elementary row operations to put A in reduced row echelon form.

- Using the reduced row echelon form, determine which of the variables x1, x2, ..., xn are free. Write equations for the dependent variables in terms of the free variables.

- For each free variable xi, choose a vector in the null space for which xi = 1 and the remaining free variables are zero. The resulting collection of vectors is a basis for the null space of A.

See the article on null space for an example.
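A minimal Python sketch of the null-space procedure is given here. For each free variable it sets that variable to 1, the other free variables to 0, and reads the dependent (pivot) variables off the reduced row echelon form. The names `rref` and `null_space_basis` and the sample matrix are assumptions for illustration.

```python
from fractions import Fraction

def rref(rows):
    """Return (reduced row echelon form, list of pivot column indices)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0]) if m else 0):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        pivot = m[r][c]
        m[r] = [x / pivot for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots

def null_space_basis(rows):
    """One basis vector of the null space per free variable."""
    reduced, pivots = rref(rows)
    n = len(rows[0])
    free = [j for j in range(n) if j not in pivots]
    basis = []
    for f in free:
        x = [Fraction(0)] * n
        x[f] = Fraction(1)                  # this free variable is 1
        for i, p in enumerate(pivots):      # row i reads x_p + sum(...) = 0
            x[p] = -reduced[i][f]
        basis.append(x)
    return basis

A = [[1, 2, 3],
     [2, 4, 6],
     [1, 0, 1]]
print(null_space_basis(A))
```

For this sample matrix the only free variable is the third one, and the resulting vector (−1, −1, 1) is annihilated by every row of A.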

Basis for the sum and intersection of two subspaces

Given two subspaces U and W of V, a basis of the sum and the intersection can be calculated using the Zassenhaus algorithm.

Equations for a subspace

- Input: A basis {b1, b2, ..., bk} for a subspace S of K^n.

- Output: An (n − k) × n matrix whose null space is S.

- Create a matrix A whose rows are b1, b2, ..., bk.

- Use elementary row operations to put A into reduced row echelon form.

- Let c1, c2, ..., cn be the columns of the reduced row echelon form. For each column without a pivot, write an equation expressing the column as a linear combination of the columns with pivots.

- This results in a homogeneous system of n − k linear equations involving the variables c1,...,cn. The (n − k) × n matrix corresponding to this system is the desired matrix with nullspace S.

- Example

- If the reduced row echelon form of A is

- then the column vectors c1, ..., c6 satisfy the equations

- It follows that the row vectors of A satisfy the equations

- In particular, the row vectors of A are a basis for the null space of the corresponding matrix.
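The procedure for finding equations of a subspace can be sketched in Python as follows. Each non-pivot column j of the reduced row echelon form is a combination of the pivot columns, and that relation becomes one homogeneous equation in the coordinates. The function name `equations_for_subspace` is a hypothetical helper, and the input is the basis (2, 1, 0, 0), (0, 0, 5, 1) from the basis example earlier in the article rather than the matrix of the example just above.

```python
from fractions import Fraction

def rref(rows):
    """Return (reduced row echelon form, list of pivot column indices)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0]) if m else 0):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        pivot = m[r][c]
        m[r] = [x / pivot for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots

def equations_for_subspace(basis):
    """Rows of an (n - k) x n matrix whose null space is span(basis)."""
    reduced, pivots = rref(basis)
    n = len(basis[0])
    equations = []
    for j in range(n):
        if j in pivots:
            continue
        # Column relation c_j = sum_i reduced[i][j] * c_{p_i} becomes
        # the equation x_j - sum_i reduced[i][j] * x_{p_i} = 0.
        row = [Fraction(0)] * n
        row[j] = Fraction(1)
        for i, p in enumerate(pivots):
            row[p] = -reduced[i][j]
        equations.append(row)
    return equations

basis = [(2, 1, 0, 0), (0, 0, 5, 1)]
for eq in equations_for_subspace(basis):
    print(eq)
```

The two rows produced encode x2 − x1/2 = 0 and x4 − x3/5 = 0, which are rescaled forms of the equations x1 = 2x2 and x3 = 5x4 satisfied by both basis vectors.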

Textbooks

- Axler, Sheldon Jay (1997), Linear Algebra Done Right (2nd ed.), Springer-Verlag, ISBN 0-387-98259-0

- Lay, David C. (August 22, 2005), Linear Algebra and Its Applications (3rd ed.), Addison Wesley, ISBN 978-0-321-28713-7

- Meyer, Carl D. (February 15, 2001), Matrix Analysis and Applied Linear Algebra, Society for Industrial and Applied Mathematics (SIAM), ISBN 978-0-89871-454-8

- Poole, David (2006), Linear Algebra: A Modern Introduction (2nd ed.), Brooks/Cole, ISBN 0-534-99845-3

- Anton, Howard (2005), Elementary Linear Algebra (Applications Version) (9th ed.), Wiley International

- Leon, Steven J. (2006), Linear Algebra With Applications (7th ed.), Pearson Prentice Hall

External links

- "Vector subspace". PlanetMath..

- Gilbert Strang, MIT Linear Algebra Lecture on the Four Fundamental Subspaces at Google Video, from MIT OpenCourseWare

Notes

- ↑ Halmos, P. R. (1942). Finite-Dimensional Vector Spaces. Princeton, NJ: Princeton University Press. p. 14. ISBN 978-1-61427-281-6.

- ↑ The term linear manifold also has two other related but distinct definitions: (i) a subspace of a Hilbert space, closed under addition and scalar multiplication, or (ii) a subset of a vector space consisting of the vectors of a linear subspace shifted by a constant vector, i.e. the subset L + v of vector space V, where L is a linear subspace of V and v is a vector in V.

- ↑ For Hilbert spaces, see Paul DuChateau, "Basic Facts About Hilbert Space" (PDF). Retrieved September 17, 2012.

- ↑ Generally, K can be any field of such characteristic that the given integer matrix has the appropriate rank in it. All fields include integers, but some integers may equal zero in some fields.

- ↑ This definition is often stated differently: vectors v1, ..., vk are linearly independent if t1v1 + ··· + tkvk ≠ 0 for (t1, t2, ..., tk) ≠ (0, 0, ..., 0). The two definitions are equivalent.

- ↑ Nering (1970, p. 21)

- ↑ Nering (1970, p. 20)

- ↑ Nering (1970, p. 21)

- ↑ Nering (1970, p. 22)

References

- Nering, Evar D. (1970), Linear Algebra and Matrix Theory (2nd ed.), New York: Wiley, LCCN 76091646